Conversational AI chatbots like ChatGPT have become all the rage recently. ChatGPT’s ability to generate remarkably human-like responses to natural language prompts has captured people’s imaginations.

It has also raised concerns about the potential misuse of the technology. This is where tools like the Chat GPT Sandbox come in.


What is ChatGPT and How Does it Work?

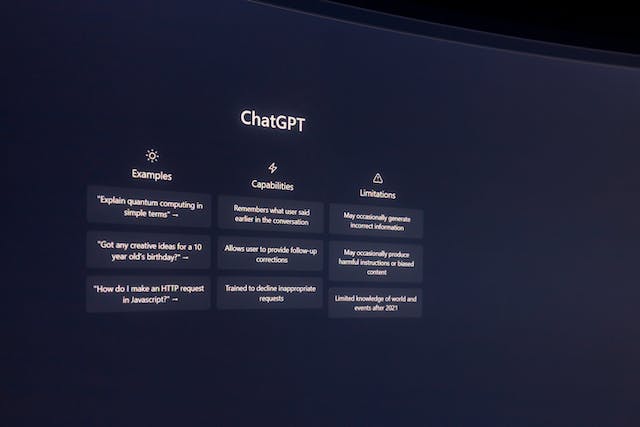

ChatGPT is a large language model developed by OpenAI and fine-tuned from a model in its GPT-3.5 series. It is trained on massive datasets of text from books, websites, and other sources to generate responses to text-based prompts.

Unlike more rigid chatbots, ChatGPT does not rely on rules and scripts. Instead, it uses deep learning techniques to produce remarkably fluent and coherent responses.

The Need for Responsible AI Practices Like Sandboxing

Despite ChatGPT’s impressive capabilities, the model has some key limitations. It can sometimes generate biased, unreliable, or harmful content without the right human guidance. Unconstrained access also raises concerns about misinformation and system abuse.

The Chat GPT Sandbox was created to address these concerns: it is a controlled testing environment designed to make AI systems like ChatGPT more beneficial while limiting their potential downsides.

Key Benefits of the ChatGPT Sandbox

- Reduces harmful responses: Sandboxing allows for greater detection and filtering of problematic content.

- Enables safe testing: Researchers can experiment safely with minimal risk.

- Gathers useful feedback: User input provides data to refine the model.

- Promotes responsible AI: Overall, sandboxing encourages accountability in AI development.

Current State of the Chat GPT Sandbox

The Chat GPT Sandbox is currently available as an invite-only beta to researchers, developers, and other qualified testers. It provides access to a specially constrained version of the ChatGPT model intended for testing purposes only.

Key things to know about the current sandbox:

- Access is limited to reduce harmful use while getting feedback.

- There are strict limits on prompt length, response length, and usage.

- The model is intentionally restricted from responding on certain controversial topics.

- Data gathered in the sandbox is used to improve ChatGPT.
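
The article does not say how these limits are enforced. As a rough illustration only, the sketch below shows one way a sandbox could apply prompt-length, response-length, and per-user usage limits. `SandboxLimits`, `UsageTracker`, and every numeric value are hypothetical, and lengths are counted in characters rather than model tokens to keep the example simple.

```python
from dataclasses import dataclass, field


@dataclass
class SandboxLimits:
    # Hypothetical values for illustration; not the real sandbox's published limits.
    max_prompt_chars: int = 2000
    max_response_chars: int = 4000
    max_requests_per_user: int = 50


@dataclass
class UsageTracker:
    limits: SandboxLimits
    counts: dict = field(default_factory=dict)  # user_id -> number of accepted requests

    def check_prompt(self, user_id: str, prompt: str) -> None:
        """Validate a prompt against the limits, then record one request for the user.

        Raises ValueError if the prompt is too long or the user's quota is used up.
        """
        if len(prompt) > self.limits.max_prompt_chars:
            raise ValueError("prompt too long")
        used = self.counts.get(user_id, 0)
        if used >= self.limits.max_requests_per_user:
            raise ValueError("usage quota exhausted")
        self.counts[user_id] = used + 1

    def truncate_response(self, response: str) -> str:
        """Cut a model response down to the configured maximum length."""
        return response[: self.limits.max_response_chars]
```

In this toy version, calling `check_prompt` before each model request and passing every reply through `truncate_response` enforces all three limits. A production system would more likely cap generation length inside the model call than truncate text afterwards.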

The Future Possibilities of ChatGPT Sandboxing

Looking ahead, the Chat GPT Sandbox could have some exciting possibilities:

- Wider access – Opening the sandbox to more testers could accelerate innovation.

- Integration – Sandboxed ChatGPT could be safely integrated into more applications.

- New capabilities – Testing may uncover new beneficial use cases.

- Commercial viability – Sandboxing could make AI chatbots viable products.

The Chat GPT Sandbox represents an important step towards developing AI responsibly. With the right constraints and testing, powerful models like ChatGPT can be used broadly to enrich people’s lives.

Chat GPT Sandbox: Testing Responsible AI

The recent explosion in popularity of AI chatbots like ChatGPT has been thrilling to witness. It has also stimulated serious discussions around the responsible development and deployment of such powerful AI systems.

This is where a tool like the Chat GPT Sandbox comes into play. In this post, we'll explore what the Chat GPT Sandbox is, how it works, its current and future possibilities, and why sandboxing is important for creating safe and beneficial AI chatbots.

Understanding ChatGPT and Its Impressive Capabilities

ChatGPT is a conversational AI system created by OpenAI and fine-tuned from a model in its GPT-3.5 series.

Since its launch in November 2022, it has enthralled people with its ability to generate remarkably human-sounding responses to natural language prompts on nearly any topic imaginable.

Unlike rigid chatbots that rely on scripts, ChatGPT uses advanced natural language processing (NLP) to parse prompts and produce fluid, coherent responses. This ability comes from its transformer-based neural network, trained with deep learning on massive amounts of text and further refined using reinforcement learning from human feedback (RLHF).
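
For readers curious about the "transformer" part: the central operation in a transformer network is attention, which lets each token weigh every other token when building its representation. The standard scaled dot-product formulation is shown below for reference; it describes transformers in general, not a published detail of ChatGPT's specific implementation.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Here $Q$, $K$, and $V$ are the query, key, and value matrices computed from the input token embeddings, and $d_k$ is the dimension of the keys. GPT-style models additionally apply a causal mask so that each token can attend only to the tokens before it, which is what allows them to generate text one token at a time.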

The Double-Edged Sword of AI Chatbots

ChatGPT’s impressive capabilities also come with risks if deployed irresponsibly. As an AI system, it lacks human judgment and can potentially generate harmful, biased, or factually incorrect information without proper constraints. Unfiltered access also raises valid concerns about the propagation of misinformation and system abuse.

This underscores the need for judicious development and testing of systems like ChatGPT before broad release. Responsible sandboxing is one approach that allows both freedom to innovate and necessary safeguards.

Conclusion

The Chat GPT Sandbox represents a promising development – a safe space to test and refine AI chatbots to minimize risks and maximize benefits before full-scale release. As AI systems grow more powerful, responsible testing and deployment tools like sandboxes will only grow in importance. They allow freedom to innovate paired with necessary caution and oversight.

Done right, the Chat GPT Sandbox could accelerate progress towards AI chatbots that enhance our world in broadly positive ways. It provides both a peek into the amazing possibilities of AI and a reminder that we must guide its growth thoughtfully.

If you have the chance to experiment with the Chat GPT Sandbox, take time to provide constructive feedback. Together we can cultivate the responsible, beneficial AI systems that our world needs.

FAQs

What is the ChatGPT Sandbox?

The ChatGPT Sandbox is a constrained testing environment for responsible experimentation with, and refinement of, the ChatGPT conversational AI model before any potential wider release.

Who can currently access the ChatGPT Sandbox?

Access is currently limited to selected researchers, developers, companies, and other qualified beta testers who have been invited directly.

How does sandboxing make AI chatbots like ChatGPT safer?

Sandboxing places guardrails around an AI system, such as limiting response length, filtering certain topics, and muting risky outputs. This lets testers explore the model's benefits while reducing potential harms.
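
To make these guardrails concrete, here is a minimal Python sketch of a wrapper that refuses prompts on blocked topics, mutes responses that drift into them, and caps response length. It assumes the model is any function mapping a prompt string to a response string; `make_guarded_model`, `REFUSAL`, and the keyword-matching approach are illustrative assumptions, not how the ChatGPT Sandbox is actually implemented. Real systems typically rely on trained classifiers rather than substring checks.

```python
from typing import Callable, Iterable

# Hypothetical refusal text used by this sketch.
REFUSAL = "Sorry, this topic is restricted in the sandbox."


def make_guarded_model(
    model: Callable[[str], str],
    blocked_topics: Iterable[str],
    max_response_chars: int = 500,
) -> Callable[[str], str]:
    """Wrap a text-generation callable with simple input and output guardrails.

    Hypothetical guardrail sketch; not the sandbox's actual implementation.
    """
    blocked = [topic.lower() for topic in blocked_topics]

    def contains_blocked(text: str) -> bool:
        # Naive case-insensitive substring match against the blocked topics.
        lowered = text.lower()
        return any(topic in lowered for topic in blocked)

    def guarded(prompt: str) -> str:
        # Input filter: refuse prompts that mention a blocked topic.
        if contains_blocked(prompt):
            return REFUSAL
        response = model(prompt)
        # Output filter: "mute" responses that drift into a blocked topic.
        if contains_blocked(response):
            return REFUSAL
        # Response limit: cap the length of whatever is returned.
        return response[:max_response_chars]

    return guarded
```

For example, wrapping a stand-in model such as `lambda p: "You said: " + p` with `blocked_topics=["weapons"]` returns the refusal message for any prompt mentioning weapons and passes other prompts through, truncated to `max_response_chars`. Checking both the prompt and the response matters because a harmless-looking prompt can still produce a problematic answer.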

What are some future possibilities for the ChatGPT Sandbox?

If successful, the sandbox could enable wider access, integration into applications, the discovery of new safe use cases, and ultimately the responsible commercialization of AI chatbots like ChatGPT.

Why is responsible sandboxing important for the development of AI systems?

Sandboxing enables transparent, accountable AI development that builds public trust. It allows AI’s benefits to emerge while risk is contained, paving the way for broadly valuable AI.